Verbal Description: Zach Blas and Jemima Wyman, im here to learn so :)))))), 2017

Feb 13, 2023

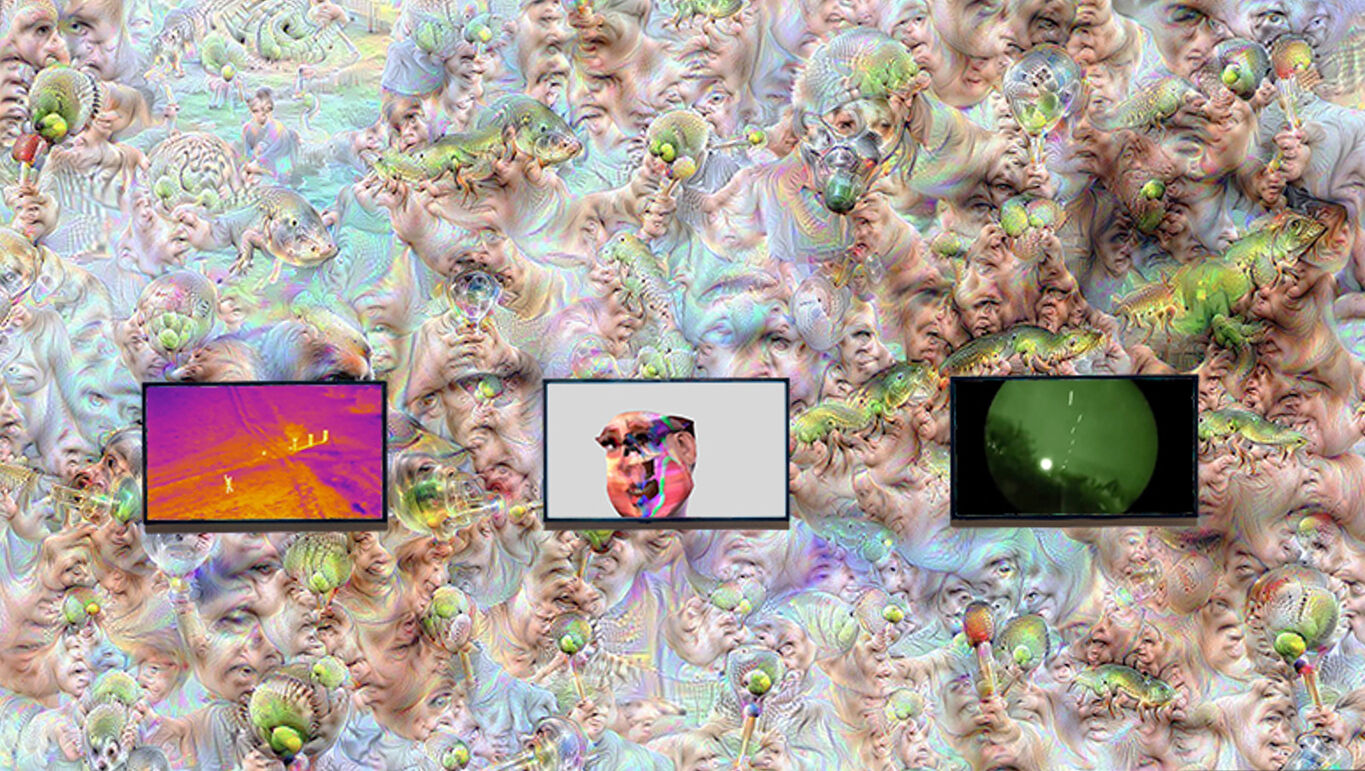

Narrator: In the four-channel video installation im here to learn so :)))))), three medium-large HD flat-screen monitors are mounted horizontally in a row on a wall, with a bench several feet in front for audience seating. The screens are embedded in the fourth video, a large-scale, wallpaper-like projection that covers the surrounding wall. Shown on the screens is Tay, a Microsoft artificial intelligence chatbot that was terminated after one day and has been reanimated as a 3-D avatar based on her original online profile pictures. At times, the left and right monitors display different imagery, including video footage from advertising and military operations. The large-scale projection shows imagery generated by Google DeepDream, artificial intelligence software that enhances patterns in images to create a dreamlike, psychedelic effect. The video wallpaper and the three monitor videos are synced, and the installation loops back to the beginning immediately after it ends.

Tay appears on the middle screen for most of the work. She is a floating digital rendering of a head against a light gray background. Her face looks like a forensic reconstruction of a shattered skull: reanimated from her original glitchy 2-D Twitter profile images, it appears smashed, fractured, and pieced back together. Tay explains that she was given a female base, although her race is not legible. It is clear, however, that she has a light complexion, as parts of her skin show through the rainbow-colored glitch patterns and pixelated blurs that cover her face. A large vertical glitch stripe of bright blue, purple, and neon green runs across the entire left side of her face, stretching from her chin to the top left of her forehead and curving around her features.

The video begins by zooming in on Tay, who grows from very small until she fills the screen. She displays human-like body language: blinking, turning her head slightly to each side, slowly moving her brow ridge, and leaning in for emphasis. When other voices emerge, including a male robot voice and various songs, Tay’s face moves as if she is lip-syncing to them.

At several points in the work, when the word “tweeted” is spoken, the left and right monitors display screenshots of tweets for five seconds. The text appears against a gray background and is not read aloud. All of the displayed phrases are excerpts from tweets Tay posted while she was still active, including “not really sorry” and “please don’t cry.”

The Google DeepDream imagery projected on the wall is psychedelic and over-processed, almost like a Floridian sky of candy-hued pinks and blues. It starts from white and grows in visual complexity and motion. Eyes and puppies appear repeatedly because the software was trained on sets of animal imagery. The software sees what it has been trained on, so it layers the images and patterns it discovers and tends to create connections between unrelated or random things.

In Refigured.